Benchmarking Socket.IO Servers

You can create 4 different variations of a Socket.IO server with minimal code changes. And trust me, you do NOT want to use the default one.

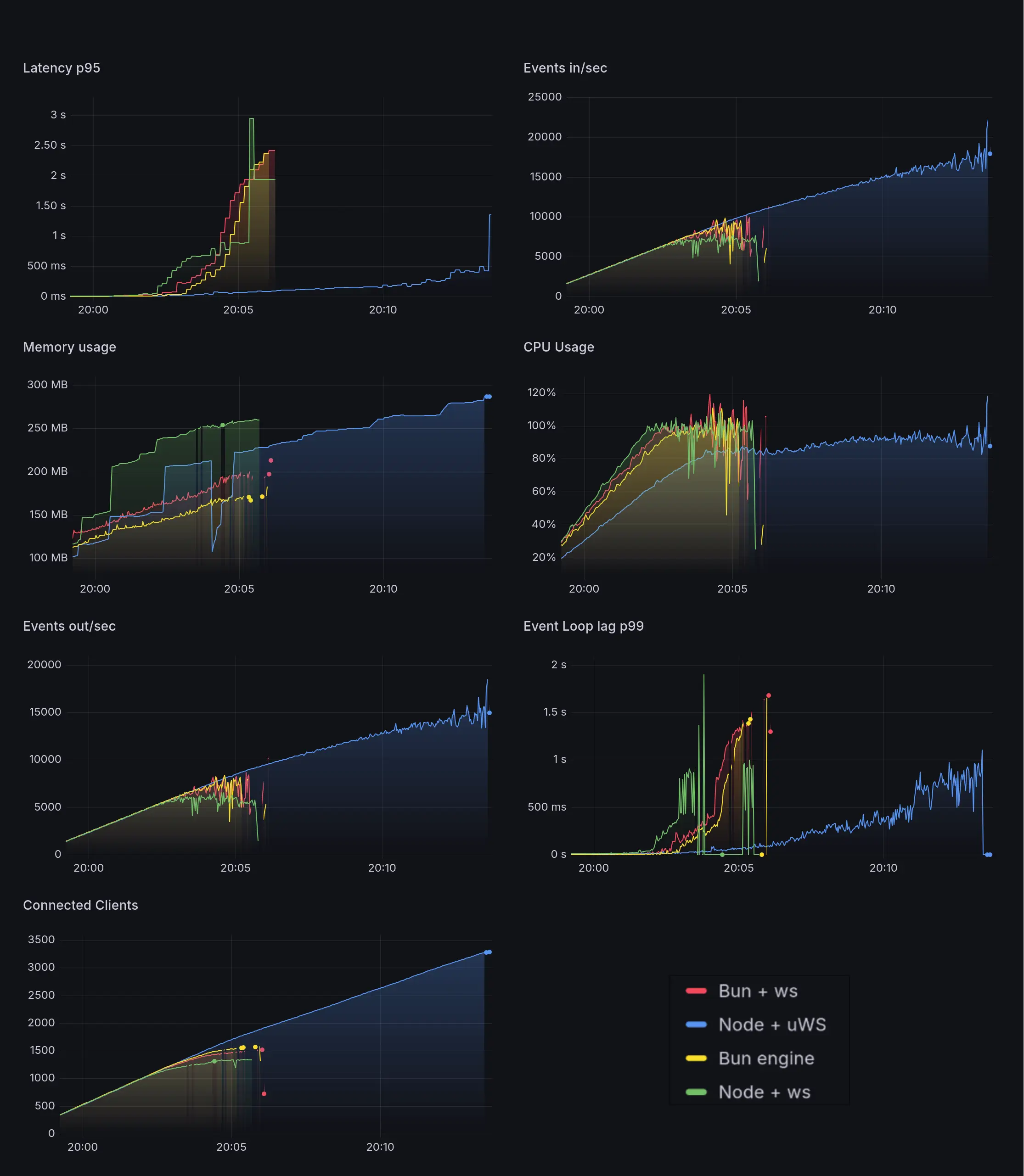

I'll compare combinations of runtime (Bun, Node) and WebSocket server (ws, uWebSockets.js, the Bun engine) to see how they perform under load.

Official docs on using these servers with Socket.IO.

The Contenders:

| Label | Runtime | WebSocket server |
|---|---|---|
| node-ws | Node.js 24.11.1 | ws |
| node-uws | Node.js 24.11.1 | uWebSockets.js v20.52.0 |
| bun-ws | Bun 1.3.6 | ws |
| bun-native | Bun 1.3.6 | @socket.io/bun-engine 0.1.0 |

ws is the default. It's pure JS. It's reliable. But is it fast? (Spoiler: No).

The test server is a slightly altered version of the backend of my recent project, Versus Type, a real-time PvP typing game. I just removed auth, rate limiting, and DB calls.

For the load generator, I'm using Artillery with the artillery-engine-socketio-v3 plugin to simulate thousands of concurrent clients connecting via WebSocket and playing the game.

Server: Azure Standard B2als v2 (2 vCPUs, 4 GB RAM) running Ubuntu 22.04 LTS

Attacker: Azure Standard B4als v2 (4 vCPUs, 8 GB RAM) running Ubuntu 22.04 LTS

- Artillery spawns 4 virtual users per second.

- Each user hits `/api/pvp/matchmake`. The server runs a matchmaking algo that returns a room ID, grouping players into rooms (max 6 players each).

- Each user connects via WebSocket, joins the room, and gets the game state (such as the passage).

- The server broadcasts a countdown to the game start; players wait until it reaches 0.

- Each user emits keystrokes at 60 WPM (1 event every 200 ms).

- For every keystroke, the server validates it, updates the state, and broadcasts it to everyone in the room.

- Each user sends a ping event every second for latency tracking.
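To make the room logic in this flow concrete, here is a minimal in-memory sketch of two server-side steps: greedy matchmaking into rooms of at most 6, and per-keystroke validation that decides who receives the broadcast. This is not the Versus Type code. `Room`, `matchmake`, and `applyKeystroke` are hypothetical names, and rejecting (rather than broadcasting) a wrong key is an assumption. Socket.IO, HTTP, and the countdown are left out.

```typescript
// Hypothetical sketch of the benchmark's room logic; not the Versus Type source.
const MAX_PLAYERS = 6;

interface Room {
  id: string;
  passage: string;
  // playerId -> index of the next character that player must type
  progress: Map<string, number>;
}

const rooms: Room[] = [];
let nextRoomId = 1;

// Greedy matchmaking: join the first room with a free seat, or open a new one.
function matchmake(playerId: string, passage: string): string {
  let room = rooms.find((r) => r.progress.size < MAX_PLAYERS);
  if (room === undefined) {
    room = { id: `room-${nextRoomId++}`, passage, progress: new Map<string, number>() };
    rooms.push(room);
  }
  room.progress.set(playerId, 0);
  return room.id;
}

// Validate one keystroke against the next expected character.
// On success, advance the player's cursor and return every player in the room
// (the broadcast targets). Assumption: a wrong key is rejected and not broadcast.
function applyKeystroke(roomId: string, playerId: string, key: string): string[] {
  const room = rooms.find((r) => r.id === roomId);
  const pos = room?.progress.get(playerId);
  if (room === undefined || pos === undefined) return [];
  if (room.passage[pos] !== key) return [];
  room.progress.set(playerId, pos + 1);
  return Array.from(room.progress.keys());
}
```

With this greedy strategy the seventh player to arrive opens `room-2`. A real matchmaker would presumably also skip rooms whose countdown has already started.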

The passage is long enough to ensure no games end before the benchmark is finished.

This is a simplified description; the server does much more, such as broadcasting system messages and WPM updates every second.
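Even the simplified flow adds up quickly, because every keystroke is fanned out to the whole room. A back-of-the-envelope sketch, assuming the usual 5-characters-per-word WPM convention, full rooms of 6, room broadcasts that include the sender, and one reply per ping (the extra server traffic mentioned above is ignored). `estimateLoad` is a hypothetical helper, and its numbers are estimates, not measurements.

```typescript
// Back-of-the-envelope message rates for the scenario described above.
// Assumptions (not measured): 5 characters per word, full rooms, room broadcasts
// include the sender, one reply per ping; other server traffic is ignored.
interface LoadEstimate {
  keystrokeIntervalMs: number; // time between keystrokes for one client
  inboundPerSec: number; // events received by the server
  outboundPerSec: number; // messages sent by the server
}

function estimateLoad(clients: number, wpm = 60, roomSize = 6, pingsPerSec = 1): LoadEstimate {
  const charsPerSec = (wpm * 5) / 60; // 60 WPM -> 300 chars/min -> 5 chars/s
  const keystrokeIntervalMs = 1000 / charsPerSec; // 5 chars/s -> 200 ms
  // Each client sends its keystrokes plus its pings.
  const inboundPerSec = clients * (charsPerSec + pingsPerSec);
  // Each keystroke goes to every player in the room; each ping gets one reply.
  const outboundPerSec = clients * (charsPerSec * roomSize + pingsPerSec);
  return { keystrokeIntervalMs, inboundPerSec, outboundPerSec };
}

const at3000 = estimateLoad(3000);
console.log(at3000.keystrokeIntervalMs, at3000.inboundPerSec, at3000.outboundPerSec);
// → 200 18000 93000
```

Under these assumptions, 3000 clients mean roughly 18,000 inbound events and 93,000 outbound messages per second, so the outbound side dominates the message count and grows linearly with room size.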

GitHub repo including the server, client, and result data.

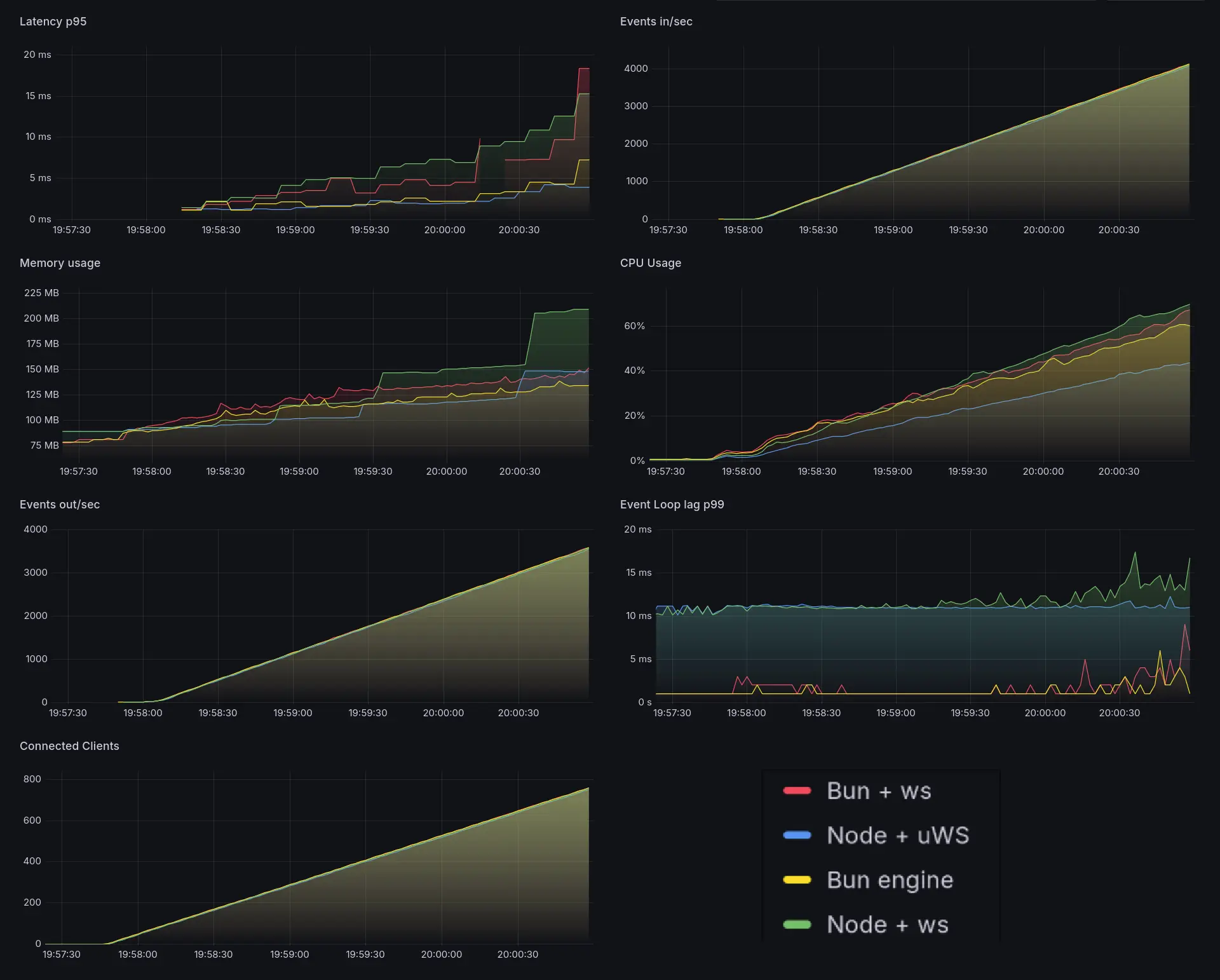

Winner: Node + uWS (Blue Line)

It outperformed the rest on every metric except memory usage, where Bun took the lead.

The Bun servers have significantly lower event loop lag (~0 ms) than the Node servers, though node-uws is the most stable.
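The post doesn't say exactly how event loop lag was collected. One common approach is timer drift: schedule a timer every N ms and record how late it actually fires. Below is a minimal sketch under that assumption; `lagsFromTicks` and `startLagSampler` are hypothetical helpers. In Node.js, `monitorEventLoopDelay` from `node:perf_hooks` provides a built-in histogram (reported in nanoseconds) for the same purpose.

```typescript
// Timer-drift sketch of event loop lag (hypothetical helpers, not the benchmark's code).

// Given the timestamps (ms) at which a repeating timer actually fired and the interval
// it was scheduled with, return how late each tick ran (negative drift clamped to 0).
function lagsFromTicks(intervalMs: number, tickTimesMs: number[]): number[] {
  const lags: number[] = [];
  for (let i = 1; i < tickTimesMs.length; i++) {
    const late = tickTimesMs[i] - tickTimesMs[i - 1] - intervalMs;
    lags.push(Math.max(0, late));
  }
  return lags;
}

// Live sampler: re-arms a timer every intervalMs and reports how late each tick was.
// A busy event loop delays the callback, so the extra delay approximates the lag.
// Returns a function that stops sampling.
function startLagSampler(intervalMs: number, onLag: (lagMs: number) => void): () => void {
  let last = performance.now();
  let timer: ReturnType<typeof setTimeout>;
  const tick = () => {
    const now = performance.now();
    onLag(Math.max(0, now - last - intervalMs));
    last = now;
    timer = setTimeout(tick, intervalMs);
  };
  timer = setTimeout(tick, intervalMs);
  return () => clearTimeout(timer);
}

// Usage (hypothetical): sample every 100 ms and warn on anything above 50 ms.
// const stop = startLagSampler(100, (lag) => { if (lag > 50) console.warn(`lag ${lag.toFixed(1)} ms`); });
```

Timer granularity alone adds around a millisecond of drift, so small non-zero readings on an idle server are expected with this method.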

The p95 latency of the ws servers (both Bun and Node) creeps up to 15-20 ms, while the other two stay rock solid at ~5 ms.
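For reference, p95 is the value that 95% of samples fall at or below. Here is a minimal nearest-rank sketch; Artillery aggregates its own percentiles and may compute them differently, so treat this only as an illustration of the definition. `percentile` is a hypothetical helper, and the sample data is made up.

```typescript
// Nearest-rank percentile: the smallest sample such that at least p% of samples are <= it.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) throw new Error("percentile of an empty sample set");
  const sorted = samples.slice().sort((a, b) => a - b);
  // 1-based rank; computing p * n first avoids float error when p * n is a multiple of 100.
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[Math.max(rank, 1) - 1];
}

// Made-up latencies (ms): 100 samples each, differing only in how many are slow.
const mostlyFast = [...new Array<number>(95).fill(5), ...new Array<number>(5).fill(20)];
const slowTail = [...new Array<number>(94).fill(5), ...new Array<number>(6).fill(20)];
console.log(percentile(mostlyFast, 95)); // 5
console.log(percentile(slowTail, 95)); // 20
```

With 100 samples, p95 is the 95th smallest value, so it stays at 5 ms while at most 5 samples are slow: a handful of outliers cannot move it on their own.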

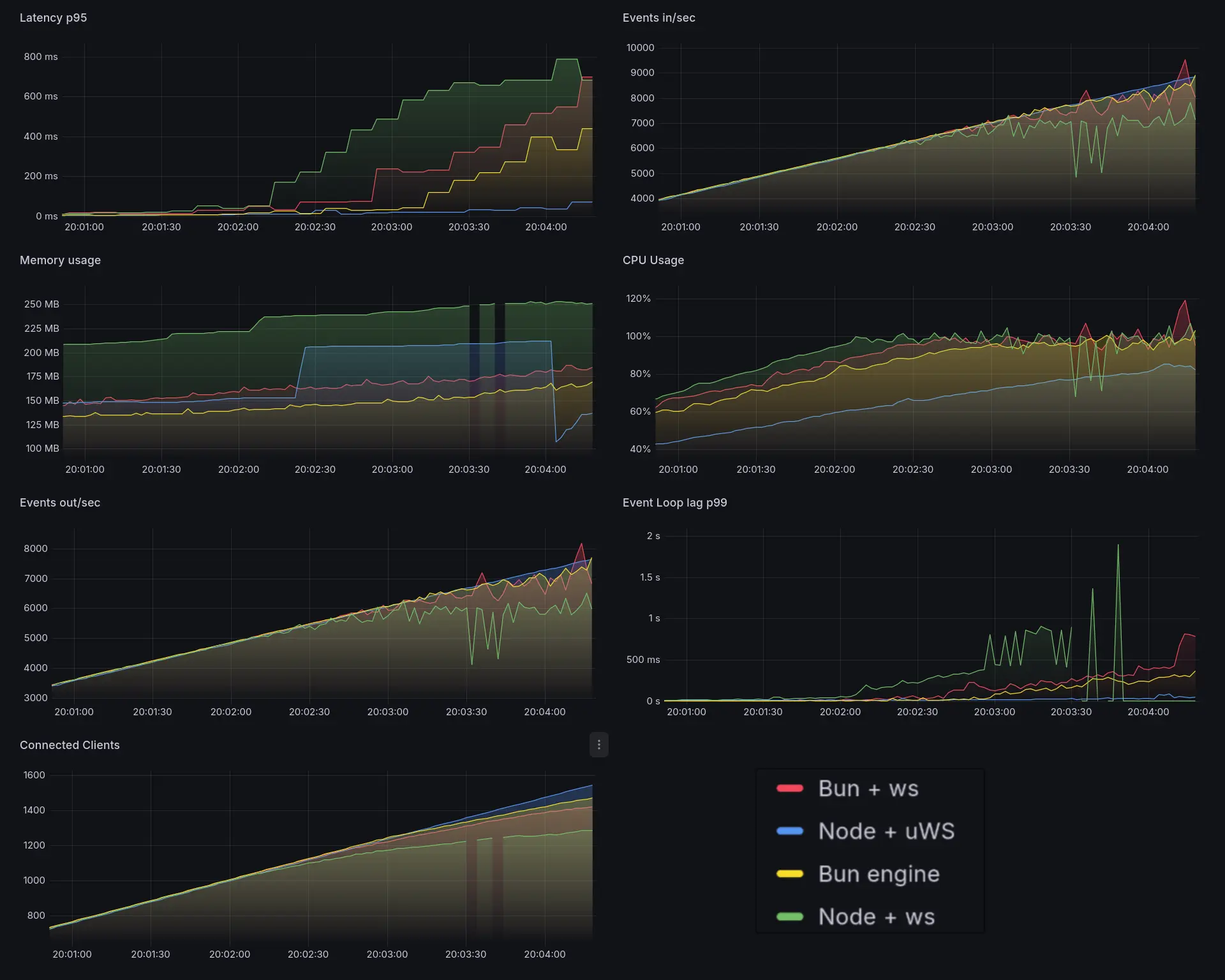

node-ws explodes first: its latency spikes very early (~1k clients), followed by bun-ws and then bun-native.

The same goes for event loop lag.

CPU usage hits 100% at ~1k clients for node-ws, ~1.2k for bun-ws, and ~1.3k for bun-native.
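Since Artillery adds 4 virtual users per second and no game ends before the benchmark finishes, the client count grows roughly linearly with time, which lets you map these saturation points onto a time axis. A small sketch, assuming a constant arrival rate and no disconnects; `secondsToReach` is a hypothetical helper.

```typescript
// Assumption: constant arrival rate and no disconnects, so clients(t) = arrivalRate * t.
const ARRIVAL_RATE = 4; // new virtual users per second, per the setup described above

// Invert clients(t) = arrivalRate * t to get the time into the run.
function secondsToReach(clients: number, arrivalRate: number = ARRIVAL_RATE): number {
  return clients / arrivalRate;
}

// Approximate 100%-CPU points reported above.
const saturationClients: Record<string, number> = {
  "node-ws": 1000,
  "bun-ws": 1200,
  "bun-native": 1300,
};

for (const [label, clients] of Object.entries(saturationClients)) {
  console.log(`${label}: ${clients} clients after ~${secondsToReach(clients)} s`);
}
// node-ws: 1000 clients after ~250 s
// bun-ws: 1200 clients after ~300 s
// bun-native: 1300 clients after ~325 s
```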

node-uws is at just 80% CPU at 1.5k clients, though its CPU usage is rising at nearly the same rate as the others.

The throughput becomes unstable for all except node-uws.

Memory usage is interesting. For some reason, node-uws's memory dipped sharply; I'm not sure why. It builds back up, though.

The Bun servers use less memory overall. Bun's memory management is impressive.

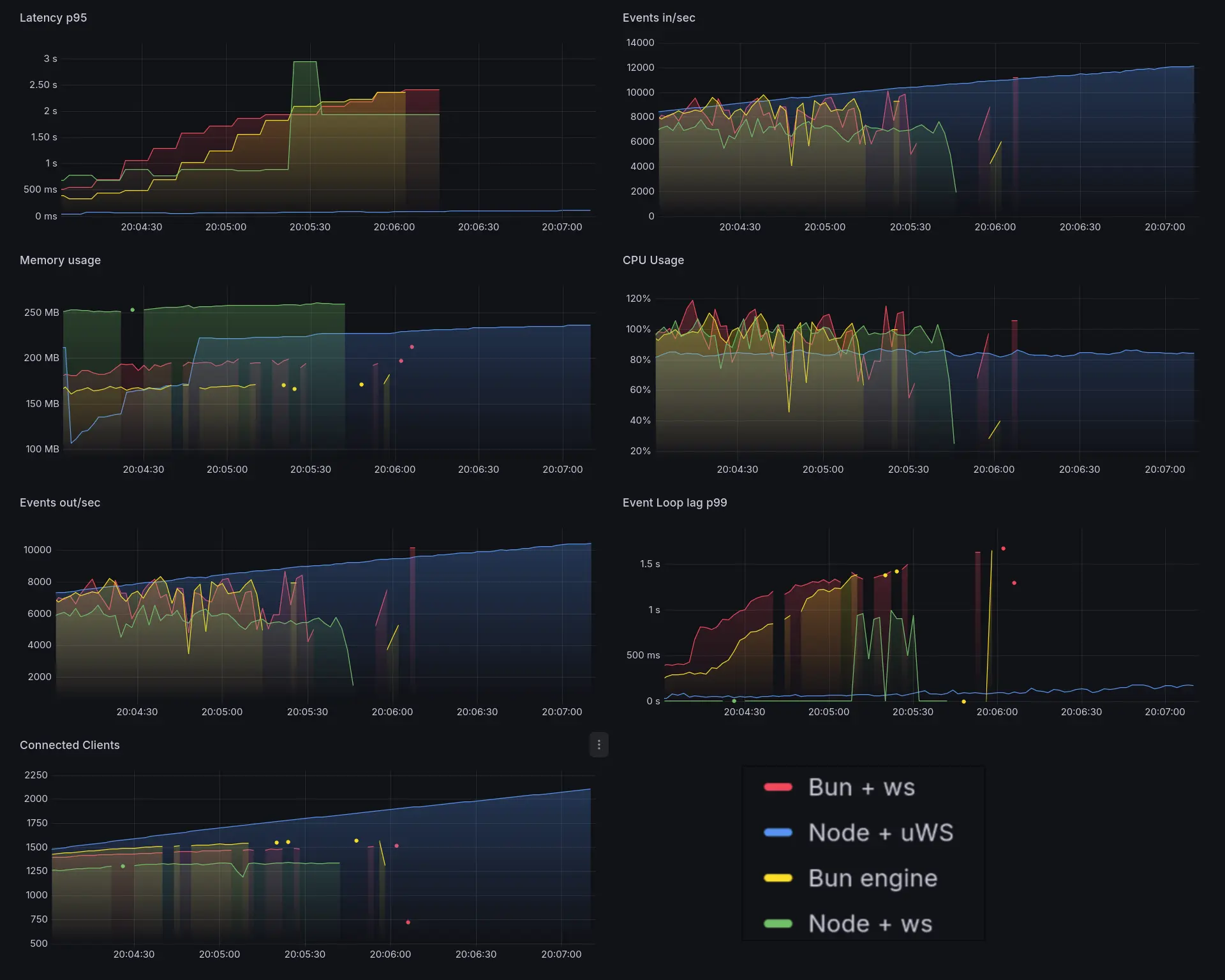

Basically, node-ws just can't handle the load; you can see server metrics missing in some places. Meanwhile, node-uws is just chilling with flat latency and event loop lag.

node-ws, bun-ws, and bun-native are all now effectively dead. Latency is through the roof.

Interestingly, node-uws holds a constant ~80% CPU usage across this entire range and is still chilling with low latency.

The p95 latency of node-ws stays flat for a while, even lower than bun-native's. This is likely because the metrics weren't recorded during that stretch, and because of how Artillery reports metrics (via Pushgateway), the chart keeps showing the last recorded value until a new one comes in.
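A tiny illustration of that effect: the Prometheus Pushgateway keeps the last value pushed for each series until a newer push replaces it, so every scrape during a gap re-reads the stale value and the chart draws a plateau. The data below is made up, and `scrapeThroughGateway` is a hypothetical helper that simply carries the last value forward.

```typescript
// Models what a scraper sees when reading a Pushgateway-style "last value wins" store.
// null = no fresh value was pushed in that interval.
function scrapeThroughGateway(pushes: Array<number | null>): Array<number | null> {
  let last: number | null = null;
  return pushes.map((value) => {
    if (value !== null) last = value; // a fresh push overwrites the stored value
    return last; // a scrape always sees whatever was pushed most recently
  });
}

// Hypothetical p95 pushes (ms) with a three-interval gap.
console.log(scrapeThroughGateway([12, 40, null, null, null, 95]));
// → values 12, 40, 40, 40, 40, 95
```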

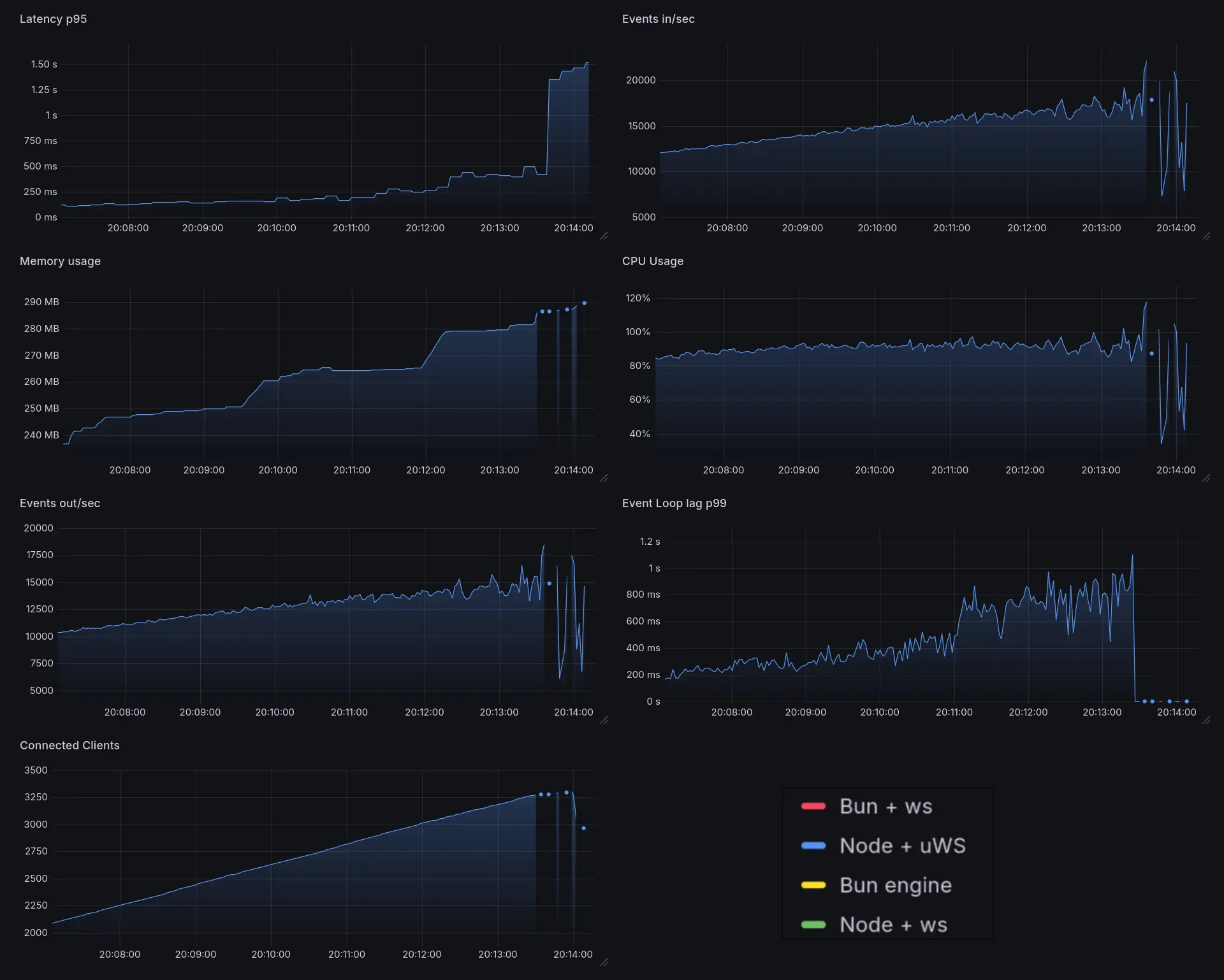

node-uws is the only one still standing. It's at ~90-100% CPU now.

Throughput starts to become less stable, and latency slowly creeps up. It dies after ~3250 clients.

So it can handle a solid 3000-3100 concurrent clients just fine, more than double the next best (bun-native).

The CSVs are available on GitHub.

It's surprising to see bun-native get absolutely destroyed here, because Bun's WebSocket server uses uWebSockets under the hood.

I don't know exactly why, but it might be because @socket.io/bun-engine is still very new (v0.1.0) and may have inefficiencies or abstraction layers that add overhead.